This week, we have an article from The New Stack on mastering the skills necessary to become an API security expert and another fine read from Dana Epp on the applicability of artificial intelligence (AI) in API defense and attack. We also have an article from Forbes on building cross-functional API teams and, finally, a review of two popular, modern static application security testing (SAST) tools.

To the readers who attended Infosecurity 2023 and made the effort to come and say hello: I appreciate your support and feedback.

Article: Becoming an API security expert

First up this week, we have an article from The New Stack on how to master the skills necessary to become an API security expert, primarily by learning to think like an attacker. The author believes that whilst many security professionals have adequate knowledge of their API inventory, of changes in the threat landscape, and of their potential for exposure, their effectiveness is limited if they cannot think like an attacker. Only by thinking like an attacker can the defender anticipate the tactics and techniques likely to be used and incorporate the appropriate countermeasures and defenses.

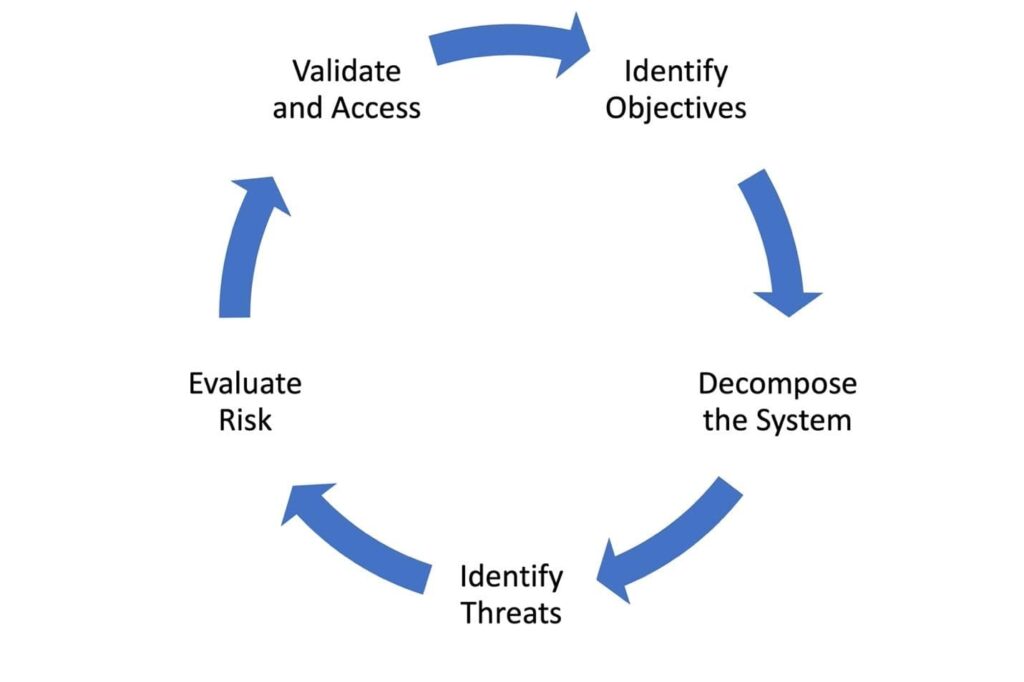

The author recommends security professionals learn the technique of threat modeling to understand their threat environment. A standard threat modeling lifecycle is shown below; this consists of the following stages: setting objectives, decomposition, threat identification, risk evaluation, and finally, assessment.

The author suggests using two popular threat modeling methodologies: Microsoft’s STRIDE methodology or their DREAD methodology. The two are highlighted in tabular form:

| Letter | STRIDE category | Description |
| --- | --- | --- |
| S | Spoofing | Impersonation of a valid user or resource to gain unauthorized access. |
| T | Tampering | Modification of system or user data with or without detection. |
| R | Repudiation | Execution of an untrusted or illegal operation in an untraceable way. |
| I | Information Disclosure | Exfiltration of private and/or business-critical information. |
| D | Denial of Service | Attack on a system that leaves it temporarily unavailable or unusable. |
| E | Elevation of Privilege | Tactic where an attacker gains privileged access to a system with the ability to compromise or destroy it. |

| Letter | DREAD category | Question that drives the score |
| --- | --- | --- |
| D | Damage | How bad would an attack be? |
| R | Reproducibility | Can an attack be easily reproduced? |
| E | Exploitability | How easy is it to mount a successful attack? |
| A | Affected Users | How many users are impacted? |
| D | Discoverability | What is the likelihood of this threat being discovered? |
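To make the DREAD categories concrete, here is a minimal Python sketch of how a team might turn the five answers into a single number. It is not taken from the article: the 1 to 10 scale, the use of a simple average, the `DreadRating` class, and the example ratings are all assumptions made for illustration.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class DreadRating:
    """Hypothetical container for one threat's DREAD ratings (assumed 1-10 scale)."""
    damage: int
    reproducibility: int
    exploitability: int
    affected_users: int
    discoverability: int

    def __post_init__(self):
        # Reject ratings outside the assumed 1-10 scale.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 1 <= value <= 10:
                raise ValueError(f"{f.name} must be between 1 and 10, got {value}")

    def score(self) -> float:
        # Assumption: the overall score is the plain average of the five ratings.
        values = [getattr(self, f.name) for f in fields(self)]
        return sum(values) / len(values)


# Illustrative ratings for a made-up broken-authorization threat on an API endpoint.
example = DreadRating(
    damage=8,
    reproducibility=9,
    exploitability=7,
    affected_users=8,
    discoverability=6,
)
print(f"DREAD score: {example.score():.1f}")
```

Scoring every identified threat the same way gives a rough ordering for the risk evaluation stage; teams may well prefer a different scale or weighting.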

To use the threat models, the security team performs an analysis of their system, asking the following four key questions (from the Threat Modeling Manifesto):

- What are we working on? This involves defining the scope of their system and its components and environment.

- How can it go wrong? Identify the threats to the system.

- What are we going to do about it? Define the countermeasures, controls, and defenses.

- Did we do a good enough job? Did the countermeasures work as intended?
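As a rough illustration of the first two questions, the Python sketch below pairs each in-scope component with each STRIDE category to produce a list of prompts for a threat modeling session. The endpoint names and the `threat_prompts` helper are hypothetical and are not part of the article or the Threat Modeling Manifesto.

```python
from enum import Enum


class Stride(Enum):
    SPOOFING = "Spoofing"
    TAMPERING = "Tampering"
    REPUDIATION = "Repudiation"
    INFORMATION_DISCLOSURE = "Information Disclosure"
    DENIAL_OF_SERVICE = "Denial of Service"
    ELEVATION_OF_PRIVILEGE = "Elevation of Privilege"


def threat_prompts(components):
    """Pair every in-scope component with every STRIDE category."""
    return [(component, category) for component in components for category in Stride]


# "What are we working on?" Hypothetical API endpoints in scope.
scope = ["POST /login", "GET /orders/{id}"]

# "How can it go wrong?" One prompt per component and category.
prompts = threat_prompts(scope)
for component, category in prompts:
    print(f"{component}: how could {category.value} happen here?")
```

The remaining two questions are then answered per prompt: record the countermeasure chosen, and later record whether it worked as intended.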

I strongly advocate for threat modeling and believe it has great potential when applied to API security. Although this article only scratches the surface, it is encouraging to see the topic being discussed.

Article: AI for API hackers

Next up is another great read from Dana Epp, focusing on the impact of artificial intelligence (AI) on API hackers. Although oriented toward API hackers and attackers, it is also a useful guide to the impact of AI on security in general. Being late to the game myself, I found it fascinating.

Dana’s first observation is a general note of caution for organizations using AI (typically ChatGPT or BingChat) in their development process. Firstly, he highlights the example of Amazon warning staff not to use ChatGPT for coding assistance in case proprietary source code lands in the hands of a close competitor. Secondly, there is a concern that GitHub Copilot has the potential to leak closed-source intellectual property even if the underlying repositories are set to private access. This is a function of how Copilot analyses and stores code snippets, and there are already examples of copyrighted code being leaked in AI-generated code. Thirdly, AI-generated code could itself be malicious and should not be trusted by default.

Dana then looks at the ways in which AI is already used defensively in products currently on the market, including:

- Anomaly detection systems that use traffic monitoring to detect deviation from baselines.

- Profiling API users and clients to detect abnormal behavior patterns.

- Performing content analysis to inspect API payloads for malicious code or data leakage.

- Automatic blocking based on the detection of malicious requests or responses.
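The first item, detecting deviation from a baseline, can be sketched in a few lines of Python. This is a deliberately simple statistical check, not the AI-driven approach the commercial products use and not something described in Dana's article; the `find_anomalies` function, the three-standard-deviation threshold, and the traffic figures are all assumptions.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs from `observed` that deviate from the
    baseline mean by more than `threshold` standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value counts as a deviation.
        return [(i, v) for i, v in enumerate(observed) if v != mu]
    return [
        (i, v)
        for i, v in enumerate(observed)
        if abs(v - mu) / sigma > threshold
    ]


# Made-up requests-per-minute figures for a single API client.
baseline_rpm = [100, 104, 98, 101, 97, 103, 99, 102]
observed_rpm = [101, 96, 450, 105]

anomalies = find_anomalies(baseline_rpm, observed_rpm)
print(anomalies)
```

With these figures only the spike to 450 requests per minute is flagged. A production system would model many more signals per client, such as endpoints called, payload sizes, and error rates, which is where machine learning earns its place.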

He then speculates on some possible future defensive solutions, including:

- Dynamic adaptation to changing threats and environments, allowing for automatic rule updates.

- Scaling across very large networks and volumes of data without compromising performance or accuracy.

- Anticipating potential attacks and acting proactively to defend the target.

Dana concludes with potential ways in which AI can, in turn, be used to thwart some of the new defensive capabilities. The use of AI for both attack and defense is likely to evolve rapidly into a cat-and-mouse game. For now, the defense has the first-mover advantage, but the widespread availability of powerful AI tools will soon place similar capabilities in the hands of attackers.

Article: Building API cross-functional teams

The next article comes courtesy of Forbes and covers the importance of cross-functional teams for API development. The report reveals the staggering scale of the global API management market, which is expected to reach $8.36 billion by 2028. However, the author cautions that a more mature process needs to be applied to API creation, including creating more roles across the ecosystem.

The key takeaway from the report is how cross-functional teams help deliver the promises of the API economy. The author suggests that whilst the API economy has the potential to unlock great value, many organizations have yet to see the real return on investment and value. Specifically, they identify four primary benefits to a more diverse API ecosystem, namely:

- Better API design: good design ensures that APIs are secure, scalable, and aligned to business objectives.

- Improved API adoption: including stakeholders in the process can increase API adoption and drive usage.

- More effective API monetization: a more diverse team can result in greater insights into how to monetize APIs.

- Enhanced API security: most important for our readers is the positive impact that diverse teams can have on improving API security. Diversity in team experience can lead to a more holistic approach to security, from defensive techniques to protection against threats. When growing your API security team, always select a diversity of skills and backgrounds, and be sure you create a collaborative culture within the team.

The report identifies eight key personas as focus areas for enablement:

- Providers: focus on frictionless and automated planning, designing, building, and running of APIs.

- Consumers: focus on ease of discovery and consumption.

- API product managers and business analysts: responsible for aligning the API to the business objectives.

- API platform team: responsible for building and scaling a well-governed API program.

- Support and DevOps: monitor APIs to enhance reliability and stability.

- Governance and security teams: ensure that APIs are compliant and secure.

- Orchestration teams: integrate APIs with the wider IT landscape.

- Leadership stakeholders: focus on the maturity, value, and ROI of APIs.

It’s always interesting to read views on the business of APIs, and it is encouraging to see the increasing focus on API security as a core element of a mature strategy.

Article: Deep dive on modern SAST tools

Finally, this week, we have a deep dive into modern static application security testing (SAST) tools. I always advocate for using SAST to establish a baseline software security program since the last decade has seen dramatic improvements in the capabilities of SAST tools. In particular, great strides have been made in the performance (execution times) and accuracy (false positive and false negative rates) of these tools. This article looks at two of the most popular low-cost (and, in some situations, totally free) tools on the market: GitHub’s CodeQL and R2C’s Semgrep.

The author provides a detailed analysis based on the following criteria: licensing terms and conditions, tooling support (typically IDE and CI/CD integrations), and language support. Although the author does not select an outright winner, both tools offer great opportunities for security teams to improve the security posture of their codebases. Ultimately, the final choice will be dictated by the languages used and the deployment environments.

It’s always good to see coverage of SAST tools. If you are establishing an API security practice, you would be well advised to consider these two fine products.

Event: apidays Interface 2023

I will be speaking at the upcoming apidays Interface 2023 online event on the topic of – you guessed it – the recently announced changes to the OWASP API Security Top 10. I’ll focus on the new flaw categories introduced, particularly looking at real-world examples of these flaws resulting in vulnerabilities.

Join me (and several of our other frequent contributors to the newsletter) on the 28th and 29th June 2023, as we cover everything relating to APIs, including security.
